As companies become more reliant on data to run and grow their businesses, and want to execute machine learning use cases that require solid data infrastructure, the role of the data engineer is becoming increasingly important.

This hiring guide covers everything you need to know about data engineering: why it has become such a popular role, what a data engineer does, and how to hire your next data engineer successfully.

About Data Engineering

Data engineering is the process of developing and constructing large-scale data collection, storage, and analysis systems. It's a vast field that is applied in almost every industry. Data engineering teams gather and manage data on large scales, using their knowledge and the right technologies to ensure that the data is in a useful state by the time it reaches data scientists, analysts, and other consumers.

Data engineers build distributed systems that collect, process, and convert raw data into usable information, which data science teams, machine learning engineers, and business intelligence experts later use in various applications.

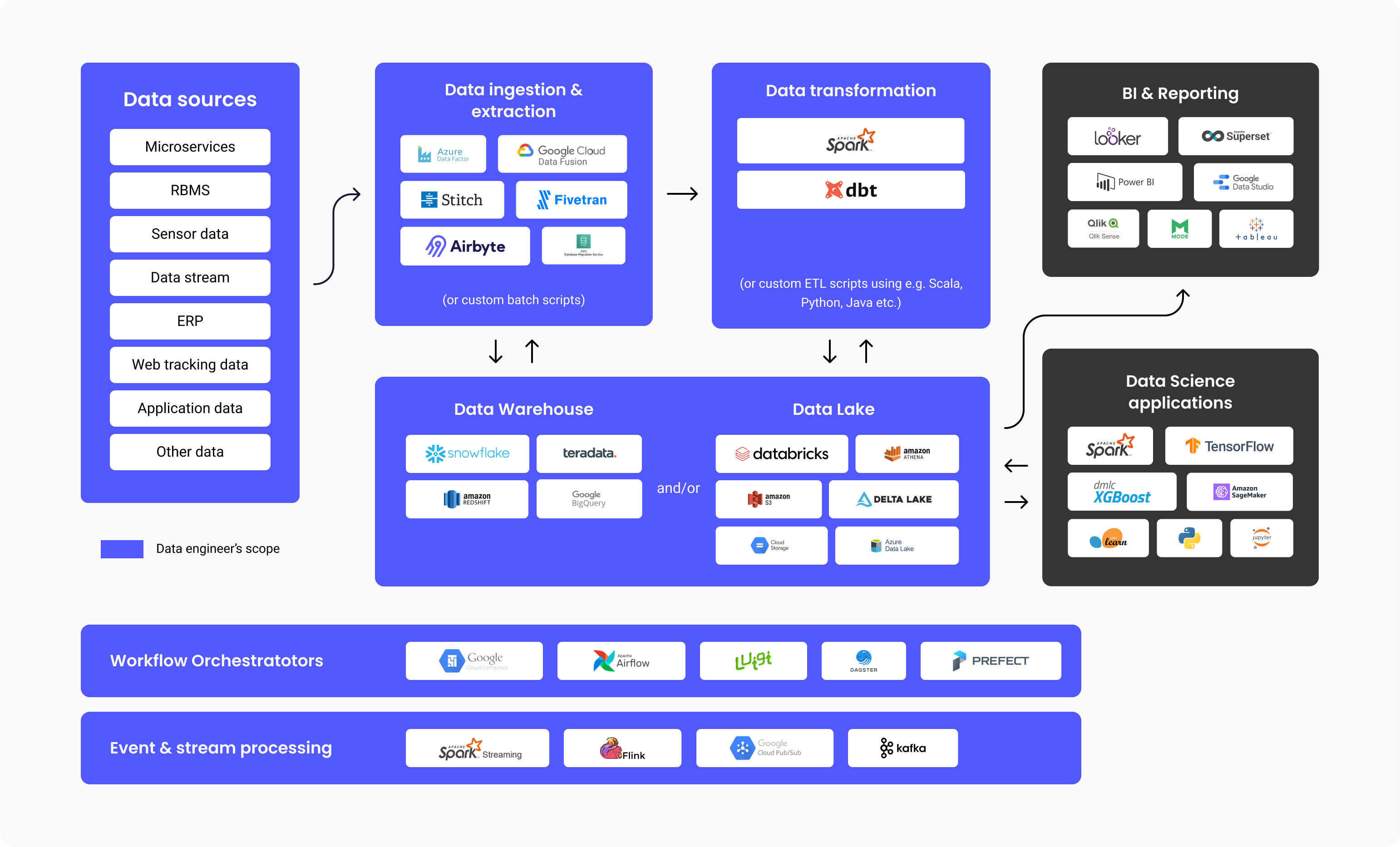

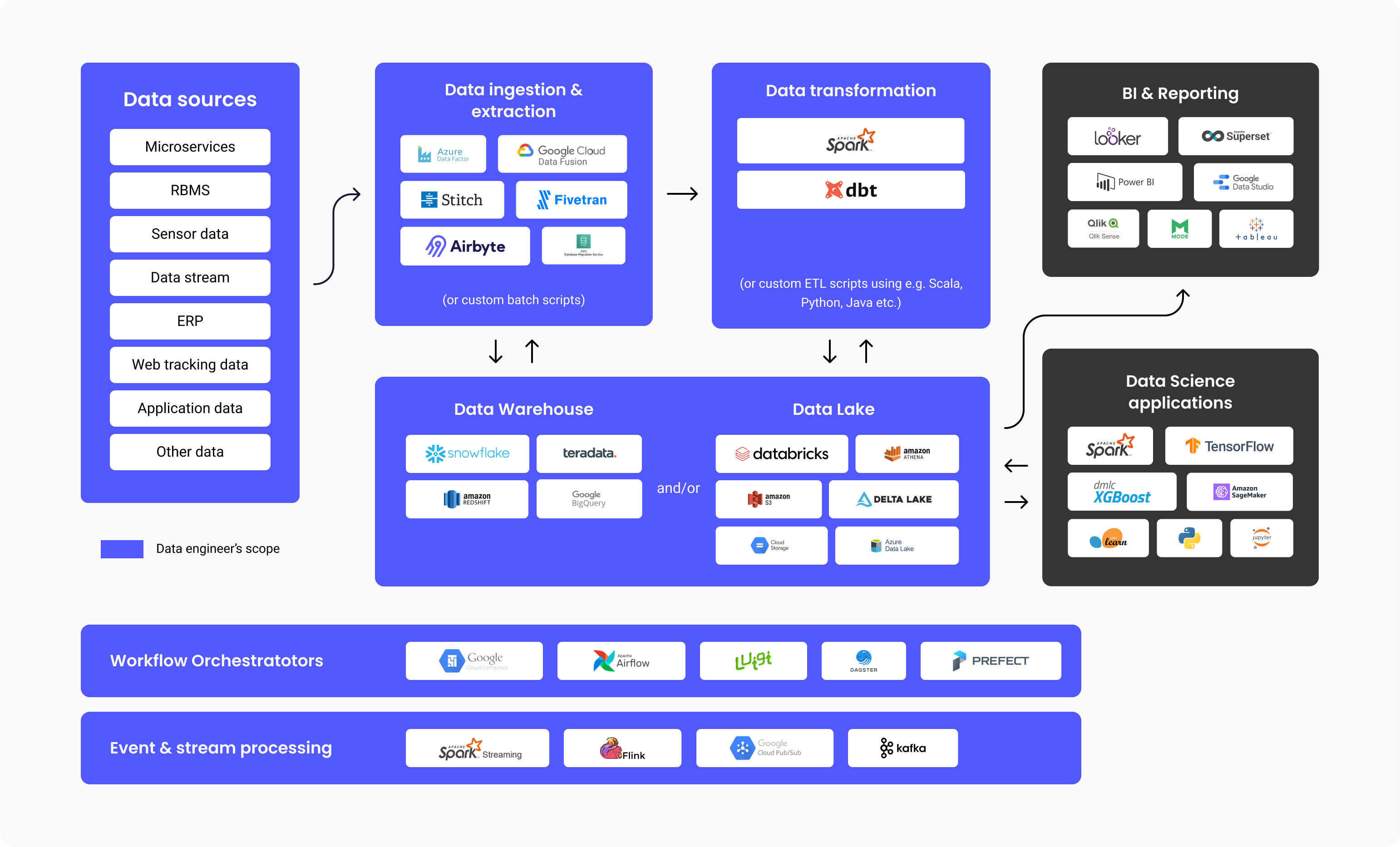

Data engineers design and build data pipelines that transform and transport large volumes of data so that it is in a highly usable format by the time it reaches end users. These pipelines typically collect data from various sources and store it in a single data warehouse or data lake repository that represents it uniformly as a single source of truth.

The above diagram illustrates the workflow of a data platform, the technologies commonly used in each step, and the scope of a data engineer's responsibilities. As you can see, a lot of data engineering work happens before the data is consumed by BI analysts or data scientists. Studies have shown that a staggering 80% of the effort spent on data-driven projects goes into data engineering to get data ready for use, while only 20% goes into creating value from that data.
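As a minimal sketch of the pipeline pattern described above (collect data from several sources, transform it into one uniform shape, and store it in a single repository), the example below uses only Python's standard library. The two sources (`crm_csv` and `web_events`) and the `customers` table are hypothetical, and an in-memory SQLite database stands in for a data warehouse such as Redshift, BigQuery, or Snowflake.

```python
import csv
import io
import sqlite3

# Hypothetical source 1: a CSV export from a CRM system
crm_csv = """id,name,country
1,Alice,se
2,Bob,de
"""

# Hypothetical source 2: records from a web application, with different field names
web_events = [
    {"user_id": 3, "full_name": "Carla", "country_code": "ES"},
]


def extract():
    """Extract: read raw records from each source."""
    crm_rows = list(csv.DictReader(io.StringIO(crm_csv)))
    return crm_rows, web_events


def transform(crm_rows, web_rows):
    """Transform: map both sources onto one uniform schema (id, name, country)."""
    unified = [(int(r["id"]), r["name"], r["country"].upper()) for r in crm_rows]
    unified += [(r["user_id"], r["full_name"], r["country_code"].upper()) for r in web_rows]
    return unified


def load(rows, conn):
    """Load: write the unified rows into a single table in the 'warehouse'."""
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, country TEXT)")
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(*extract()), conn)
print(conn.execute("SELECT * FROM customers ORDER BY id").fetchall())
```

In a production pipeline, each step would typically be a separate, scheduled task so that failures can be retried independently.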

According to the 2022 Stack Overflow Developer Survey, data engineers earn an average annual salary of $79,983, which places the profession in the upper part of the salary charts.

Why and when do you need to hire a Data Engineer?

You need to hire a data engineer if you want to build applications or data platforms (data warehouses, data lakes) that require retrieving and consolidating data from various sources.

This need typically arises either when you have a machine learning use case that requires vast amounts of data, or when you need a centralized repository for your structured and unstructured data, such as a data lake or data warehouse.

Types of data engineers

Big Data-centric data engineer

A Big Data engineer focuses on handling large datasets. The data is typically stored in distributed file systems or object storage systems rather than relational databases. To handle these volumes of data, a Big Data engineer uses data processing frameworks such as Spark, MapReduce, or Flink. Even though SQL is frequently used, most of the programming is done in languages such as Scala, Python, or Java, which makes the role similar to that of a backend developer. A Big Data engineer typically uses the ETL (Extract, Transform, Load) process and builds batch and streaming data pipelines, which are often orchestrated by tools such as Apache Airflow.

Database or Data Warehouse-centric data engineer

A database or Data Warehouse-centric data engineer focuses primarily on extracting and transforming structured data from relational databases. This work typically involves standard database management systems, table-driven logic, database servers, and stored procedures.

What does a data engineer do day-to-day?

The responsibilities and tasks of a data engineer typically include:

- Identifying and implementing infrastructure redesigns for scalability

- Optimizing data delivery

- Assembling large sets of data

- Building infrastructure for data extraction and loading

- Creating analytical tools that use the data pipeline to provide insight into operational efficiency

We also asked Mehmet Ozan Ünal, a data engineer at Proxify, about the day-to-day tasks this position entails, and he said:

“Data engineers usually create ETL pipelines, design schemas, and monitor and schedule pipelines. Another crucial responsibility is designing and formatting the company's data infrastructure. A data engineer should connect data sources (for example SAP, IoT (Internet of Things), and app data) with data consumers (data analysts, data scientists, business people, machine learning pipelines, business intelligence, and reporting systems).”

Mehmet Ozan Ünal

In a nutshell, a data engineer's work includes:

- Developing and maintaining data platforms

- Analyzing raw data in depth

- Improving the quality and efficiency of all data

- Developing and testing architectures for extracting and transforming data

- Building data pipelines

- Building algorithms to process data efficiently

- Researching methods to improve data reliability

- Supporting the development of analytical tools

Interviewing a Data Engineer

Essential technologies and programming languages for a data engineer

Mehmet lists the top technologies a data engineer must know:

- Programming languages: SQL and either Python, Scala, or Java

- Tools and systems: Kafka, Spark, Apache Airflow (for data pipeline orchestration), transactional databases (MySQL, PostgreSQL), and data formats (Parquet, Protobuf, Avro)

- Coding: version control (Git), algorithms, and data structures

- Containerization and CI/CD: Docker and CI/CD systems

- Cloud: Azure, GCP, or AWS

There are specific tools that make data engineering more efficient. The top five categories are listed below:

1. Data Warehouses

- Amazon Redshift: A cloud data warehouse for easy data setup and scaling.

- Google BigQuery: A serverless cloud data warehouse, well suited to smaller businesses that want to scale.

- Snowflake: A fully managed SaaS platform that serves multiple purposes, such as data engineering, data lakes, data warehousing, data app development, and more.

2. Data ingestion and extraction

- Apache Spark: An open-source analytics engine for large-scale data processing. Besides the open-source project, Spark is also available through Databricks, a web-based platform created by Spark's original creators.

- Google Cloud Data Fusion: A managed service with a web UI for building scalable data integration solutions that prepare and transform data without managing infrastructure.

- Azure Data Factory: Azure's serverless ETL (Extract, Transform, Load) service for creating data flows and integrating data without managing servers.

- dbt: Data Build Tool, a tool for transforming data directly in the warehouse, with the whole transformation process defined as code.

3. Data Lake and Lakehouse

- Databricks: A unified, open platform for all data, used for both scheduled and interactive data analysis.

- Amazon S3: An object storage service that provides scalability, performance, and security for data stored as objects.

- Google Cloud Storage: Google's object storage service for storing data in Google Cloud.

- Azure Data Lake: Microsoft's public cloud platform of services and products for big data analytics.

4. Workflow orchestrators

- Apache Airflow: An open-source workflow management system (WMS) for organizing, scheduling, and monitoring workflows.

- Luigi: A Python package for building, scheduling, and orchestrating pipelines.

5. Event and stream processing

- Google Cloud Pub/Sub: An asynchronous messaging service for fast alerts and notifications that enables parallel processing and event-driven architectures.

- Apache Kafka: An open-source event store and stream-processing platform.

Technical skills of a Data Engineer

A data engineer has to have these crucial technical skills:

- Data collecting – handling the volume of data, but also the variety and velocity.

- Coding – proficiency in programming is vital, so a data engineer needs an excellent grasp of Scala, Java, or Python, the most commonly used languages for data engineering systems and frameworks.

- Data transforming – the data engineer has to be well-versed in data transformations, for example cleansing (e.g., removing duplicates), joining datasets, and aggregating data.

- Data warehousing – knowing how to divide the data warehouse (DW) into tiers and how to create fact tables by joining and aggregating tables to make reporting more efficient.

- Data analyzing – knowing how to draw insights from a dataset, especially for quality checks such as examining data distributions and checking for duplicates.
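The cleansing, joining, and aggregating skills from the list above can be illustrated in plain Python. This is a minimal sketch on hypothetical in-memory data (`orders`, `customers`, and the helper function names are illustrative only); at scale, the same three steps would usually be written in SQL or a framework such as Spark.

```python
from collections import defaultdict

# Hypothetical raw inputs: the orders contain an exact duplicate row
orders = [
    {"order_id": 1, "customer_id": "c1", "amount": 20.0},
    {"order_id": 1, "customer_id": "c1", "amount": 20.0},  # duplicate
    {"order_id": 2, "customer_id": "c2", "amount": 35.5},
    {"order_id": 3, "customer_id": "c1", "amount": 10.0},
]
customers = {"c1": "Sweden", "c2": "Germany"}  # hypothetical customer_id -> country


def deduplicate(rows, key):
    """Cleansing: keep only the first row seen for each key value."""
    seen, result = set(), []
    for row in rows:
        if row[key] not in seen:
            seen.add(row[key])
            result.append(row)
    return result


def join_country(rows, lookup):
    """Joining: enrich each order with its customer's country."""
    return [{**row, "country": lookup[row["customer_id"]]} for row in rows]


def revenue_by_country(rows):
    """Aggregating: total order amount per country."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["country"]] += row["amount"]
    return dict(totals)


clean = deduplicate(orders, "order_id")
print(revenue_by_country(join_country(clean, customers)))
```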

Additionally, Mehmet said:

“A good data engineer has to have hands-on knowledge and experience with coding and data warehousing, along with basic machine learning, basic data modeling, and Linux and shell scripting.”

Top interview questions (and answers) for assessing Data Engineers

One excellent approach to assessing a candidate's technical abilities is to ask data engineering-specific questions that help you separate the wheat from the chaff. To test and evaluate a data engineer's skills and expertise and find the best candidate, you might want to ask about the following:

1. Can you explain more about the Reducer and its methods?

Expected answer: In the Hadoop ecosystem, the Reducer is a component of the MapReduce programming model that performs the data aggregation and summarization phase. Reducers receive the intermediate key-value pairs produced by the Mappers, process and combine them based on the keys, and produce the final output.

The Reducer operates on a subset of key-value pairs that share the same key. Its main responsibility is to process and combine the values associated with each unique key, performing various computations or transformations to generate the final output.

Here are the main methods in the Reducer class within the Hadoop ecosystem:

- setup(): The setup() method is called once at the beginning of each Reducer task. It is used to initialize any resources or configurations required by the Reducer.

- reduce(): The reduce() method is the core processing method of the Reducer class. It is invoked once for each unique key and a list of values associated with that key. Developers must override this method and implement custom logic for aggregating or processing the values. The reduce() method typically iterates over the values and performs computations, summaries, or transformations to generate the final output for each key.

The method signature for reduce() is as follows:

protected void reduce(KEYIN key, Iterable<VALUEIN> values, Context context) throws IOException, InterruptedException

Here, "key" represents the unique key associated with the values, "values" is an iterable collection of values corresponding to the key, and "context" provides various utilities and services for interacting with the Hadoop framework.

- cleanup(): The cleanup() method is called once at the end of each Reducer task. It is used to release resources or perform final cleanup operations before the Reducer task finishes.

The Reducer class also provides a run() method, which drives the setup(), reduce(), and cleanup() lifecycle and can be overridden for advanced control over execution. Job configuration, status reporting, progress updates, counters, and writing output are handled through the Context object passed to these methods.
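Hadoop's Reducer is a Java class and needs a Hadoop runtime, so the sketch below is a toy, single-process Python model of the same lifecycle rather than Hadoop's API. The `WordCountReducer` class, `mapper`, and `run_job` driver are hypothetical names: the driver groups mapper output by key (standing in for the shuffle and sort phase), then calls `setup()` once, `reduce()` once per unique key, and `cleanup()` once.

```python
from itertools import groupby
from operator import itemgetter


class WordCountReducer:
    """Toy analogue of a Hadoop Reducer (hypothetical, not Hadoop's API)."""

    def setup(self):
        # Called once before any reduce() call: initialize state or resources
        self.output = {}

    def reduce(self, key, values):
        # Called once per unique key with all values emitted for that key
        self.output[key] = sum(values)

    def cleanup(self):
        # Called once after the last reduce() call: finalize and release
        return self.output


def mapper(line):
    """Emit an intermediate (word, 1) pair for every word in a line."""
    return [(word, 1) for word in line.lower().split()]


def run_job(lines, reducer):
    """Simulate map -> shuffle/sort -> reduce in a single process."""
    pairs = [pair for line in lines for pair in mapper(line)]
    pairs.sort(key=itemgetter(0))  # shuffle/sort: bring equal keys together
    reducer.setup()
    for key, group in groupby(pairs, key=itemgetter(0)):
        reducer.reduce(key, (value for _, value in group))
    return reducer.cleanup()


print(run_job(["big data", "Big pipelines"], WordCountReducer()))
```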

2. What is the usage of *args and **kwargs?

Expected answer: In Python, *args and **kwargs are used in function definitions to accept a variable number of arguments.

*args collects any number of positional (non-keyword) arguments into a tuple, which the function can iterate over or operate on. **kwargs collects any number of keyword arguments into a dictionary, so the function can perform dictionary operations on them.

For example, we can use *args to make a simple multiplication function with several arguments.

    def multiply_numbers(*numbers):
        # numbers is a tuple containing every positional argument passed in
        product = 1
        for n in numbers:
            product *= n
        return product

    print("product:", multiply_numbers(4, 4, 4, 4, 4, 4))

Output:

    product: 4096

**kwargs collects keyword arguments into a dictionary inside the function:

    def what_tech_they_use(**kwargs):
        # kwargs is a dict mapping each keyword name to its value
        result = []
        for key, value in kwargs.items():
            result.append(f"{key} uses {value}")
        return result

    print(what_tech_they_use(Google="Angular", Facebook="React", Microsoft=".NET"))

Output:

    ['Google uses Angular', 'Facebook uses React', 'Microsoft uses .NET']
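A common follow-up question is how the two forms combine and how they are unpacked again at a call site. In this sketch (the `describe_call` and `log_and_call` names are hypothetical), `*args` arrives as a tuple and `**kwargs` as a dict, and the same operators unpack them when forwarding to another function, which is the usual pattern in decorators and wrapper functions.

```python
def describe_call(*args, **kwargs):
    # args is a tuple of positional arguments; kwargs is a dict of keyword arguments
    return args, kwargs


def log_and_call(func, *args, **kwargs):
    # Forward the received arguments unchanged by unpacking them again
    print(f"calling {func.__name__} with {args} and {kwargs}")
    return func(*args, **kwargs)


print(describe_call(1, 2, engine="spark"))
print(log_and_call(max, 3, 7, 5))
```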

3. Compare Star Schema and Snowflake Schema.

Expected answer: Star and snowflake schemas are two multidimensional data models commonly used in data warehouses. Each has benefits and drawbacks, and the best choice for a particular application depends on its specific requirements.

- Star schema: A star schema is a simple and efficient data model commonly used for reporting and analysis. It consists of a central fact table surrounded by several dimension tables. The fact table contains the measurements of interest (and is usually the largest table), and the dimension tables provide context for those measurements. The main advantage of a star schema is its simplicity: the dimension tables are denormalized, so a query needs only one join between the fact table and each dimension, which makes star schemas very efficient for reporting and analysis. The main disadvantages are redundant data in the denormalized dimensions and a certain inflexibility: adding new dimensions or measurements can be difficult if the requirements change.

- Snowflake schema: A snowflake schema is a more complex data model than a star schema. It is similar to a star schema, but the dimension tables are normalized into multiple smaller, related tables. This makes storing and managing complex data easier but makes the schema more difficult to query. The main advantage of a snowflake schema is its flexibility: adding new dimensions or measurements is easier than with a star schema. The main downside is its overall complexity. The schema can be harder to understand, and the extra joins needed to query it can lead to performance problems down the road.
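To make the comparison concrete, here is a minimal star schema built in an in-memory SQLite database from Python. The `fact_sales`, `dim_product`, and `dim_date` tables and their contents are hypothetical. A typical report joins the fact table directly to each dimension; in a snowflake schema, `dim_product` would itself be split (for example, into separate product and category tables), adding one more join to the same query.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Dimension tables (hypothetical): denormalized descriptive attributes
    CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, name TEXT, category TEXT);
    CREATE TABLE dim_date (date_id INTEGER PRIMARY KEY, year INTEGER, month INTEGER);
    -- Fact table: foreign keys to the dimensions plus numeric measures
    CREATE TABLE fact_sales (
        product_id INTEGER REFERENCES dim_product(product_id),
        date_id INTEGER REFERENCES dim_date(date_id),
        quantity INTEGER,
        revenue REAL
    );
    INSERT INTO dim_product VALUES (1, 'Laptop', 'Electronics'), (2, 'Desk', 'Furniture');
    INSERT INTO dim_date VALUES (202401, 2024, 1), (202402, 2024, 2);
    INSERT INTO fact_sales VALUES (1, 202401, 2, 2000.0), (2, 202401, 1, 300.0), (1, 202402, 1, 1000.0);
""")

# Star-schema query: one join from the fact table to each dimension table
query = """
    SELECT p.category, d.month, SUM(f.revenue) AS revenue
    FROM fact_sales f
    JOIN dim_product p ON f.product_id = p.product_id
    JOIN dim_date d ON f.date_id = d.date_id
    GROUP BY p.category, d.month
    ORDER BY p.category, d.month
"""
print(conn.execute(query).fetchall())
```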

4. Can you list and elaborate on the various approaches to data validation?

Expected answer: Data validation is the process of ensuring that source data is accurate and of high quality before it is used, imported, or processed. Different types of validation can be performed depending on the objectives or the destination's constraints. Validation is a form of data cleansing.

- Format Validation: Format validation involves checking if the data adheres to a specified format or structure. This can include verifying data types, lengths, patterns, or adherence to predefined standards. Examples include validating email addresses, phone numbers, social security numbers, or dates in a specific format.

- Range and Boundary Validation: Range and boundary validation involves verifying that data falls within acceptable limits. This can include checking numerical values against the specified minimum and maximum values, ensuring dates are within a specific range, or validating that values are within an expected set or domain.

- Cross-Field Validation: Cross-field validation involves validating the relationship between multiple fields in a dataset. It ensures that the combination of values across different fields is logically consistent. For example, if there are fields for start date and end date, cross-field validation would check that the end date is not earlier than the start date.

- Code or Reference Validation: Code or reference validation involves validating codes or references against predefined lists or lookup tables. It ensures that the data references valid entities, such as checking that a product code exists in a product catalog or verifying that a customer ID corresponds to an actual customer in a database.

- Business Rule Validation: Business rule validation involves checking data against specific rules or constraints. These rules are derived from business requirements or domain knowledge. Examples include checking if a customer's age meets a particular requirement, ensuring that inventory levels do not exceed capacity, or validating that a discount percentage is within a defined range.

- Completeness Validation: Completeness validation ensures that all required data elements are present. It checks whether mandatory fields are filled and whether the data needed to support the desired analysis or processing is available. Examples include validating that all required fields are populated in a customer record or verifying that essential data is not missing from a financial transaction.

- Referential Integrity Validation: Referential integrity validation ensures consistent relationships between data elements. It involves verifying that foreign keys in a database table reference valid primary keys in another table. This ensures the integrity and consistency of the data relationships.

- Data Quality Rules and Statistical Validation: Data quality rules and statistical validation involve applying statistical techniques and algorithms to identify data anomalies, outliers, or patterns that deviate from expected norms. This includes analyzing data distributions, identifying data gaps, detecting duplicates, or identifying data inconsistencies through data profiling and statistical analysis.
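Several of these approaches can be expressed as simple rule functions. The sketch below is a minimal, hypothetical example in plain Python: the record fields, the `VALID_COUNTRIES` lookup, the age limits, and the deliberately simple email pattern are all assumptions for illustration, and production pipelines often rely on a dedicated validation framework instead.

```python
import re
from datetime import date

VALID_COUNTRIES = {"SE", "DE", "US"}  # hypothetical reference list
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # deliberately simple pattern


def validate(record):
    """Return a list of rule violations for one record (empty list = valid)."""
    errors = []
    # Completeness validation: required fields must be present and non-empty
    for field in ("email", "age", "country", "start", "end"):
        if record.get(field) in (None, ""):
            errors.append(f"missing {field}")
    if errors:
        return errors
    # Format validation
    if not EMAIL_RE.match(record["email"]):
        errors.append("invalid email format")
    # Range and boundary validation
    if not 18 <= record["age"] <= 120:
        errors.append("age out of range")
    # Code or reference validation against a lookup set
    if record["country"] not in VALID_COUNTRIES:
        errors.append("unknown country code")
    # Cross-field validation
    if record["end"] < record["start"]:
        errors.append("end date before start date")
    return errors


good = {"email": "a@example.com", "age": 30, "country": "SE",
        "start": date(2024, 1, 1), "end": date(2024, 6, 1)}
bad = {"email": "not-an-email", "age": 12, "country": "XX",
       "start": date(2024, 6, 1), "end": date(2024, 1, 1)}
print(validate(good))  # []
print(validate(bad))
```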

5. What is a DAG in Apache Spark?

Expected answer: In Apache Spark, a DAG (Directed Acyclic Graph) represents a fundamental concept for the logical flow of operations and transformations in a Spark application. It captures a sequence of operations executed on a distributed dataset (RDD or DataFrame) to produce a desired output.

In this graph, the vertices represent datasets (RDDs, or the partitions within them), and the edges represent the transformations that produce one dataset from another, such as map, filter, or join. Transformations only extend the DAG; an action, such as count, collect, or save, triggers its execution and produces a final result.

The DAG represents the dependencies between these operations, indicating the order in which transformations must be applied and the relationships between data partitions. It is built from the operations defined in the Spark application code.

Spark optimizes the execution of the DAG: its DAG scheduler splits the graph into stages at shuffle boundaries, pipelines consecutive narrow transformations (such as a map followed by a filter) within a stage, and runs the tasks of each stage in parallel. Because evaluation is lazy, Spark can analyze the whole DAG before running it and choose an efficient execution plan that minimizes data shuffling and optimizes resource utilization.

The DAG also provides resilience and fault tolerance: because it records the lineage of every dataset, Spark can recover from a failure by recomputing only the lost partitions.
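The core property of a DAG, that every operation can be ordered after its inputs, can be illustrated without Spark. This toy model uses Python's standard `graphlib` module (Python 3.9+) on a hypothetical chain of operation names; it is not Spark's scheduler, which additionally splits the graph into stages at shuffle boundaries. A cyclic graph is rejected because no valid execution order exists.

```python
from graphlib import CycleError, TopologicalSorter

# Each hypothetical operation maps to the set of operations it depends on
lineage = {
    "read_orders": set(),
    "read_customers": set(),
    "filter_orders": {"read_orders"},
    "join": {"filter_orders", "read_customers"},
    "aggregate": {"join"},
    "count (action)": {"aggregate"},
}

# A valid execution order: every operation appears after all of its inputs
order = list(TopologicalSorter(lineage).static_order())
print(order)

# A cycle makes the graph invalid: there is no order in which to run it
try:
    list(TopologicalSorter({"a": {"b"}, "b": {"a"}}).static_order())
except CycleError:
    print("cycle detected: not a DAG")
```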

6. What is lazy evaluation in Spark?

Expected answer: Lazy evaluation in Spark is a technique that delays the evaluation of an expression until its value is needed. You can apply as many transformations as you want to a Spark RDD, but Spark only executes them when you call an action.

There are several advantages to using lazy evaluation in Spark. First, it helps improve performance. By delaying evaluation, Spark avoids unnecessary computations and can combine several transformations into a single pass over the data. For example, if you apply a filter transformation to an RDD, Spark does not load the data into memory or perform the filtering until you call an action, and if no action ever uses that RDD, the work is never done.

Second, lazy evaluation supports fault tolerance. Because Spark records the chain of transformations (the lineage) instead of immediately materializing every intermediate result, it can recompute any lost partition from the source data if a node fails.

Finally, lazy evaluation can make Spark code easier to read and write. Because nothing runs until an action is called, you can break complex operations into smaller, more manageable steps without paying for intermediate results, which keeps your code readable.

7. How does Spark differ from Hadoop MapReduce?

Expected answer: Spark and Hadoop MapReduce are both distributed computing frameworks for processing large-scale datasets. However, there are several differences between them:

- Ease of use: Spark provides a more user-friendly and expressive programming model than Hadoop MapReduce. It offers high-level APIs in multiple languages (such as Scala, Java, Python, and R), making writing and maintaining complex data processing tasks easier. On the other hand, MapReduce requires developers to write more low-level code, which can be more challenging and time-consuming.

- Speed: Spark is often significantly faster than MapReduce because of its in-memory computing capabilities. While MapReduce writes intermediate data to disk after each step, Spark keeps data in memory where possible, reducing the need for disk I/O operations. This in-memory processing makes Spark much faster for iterative algorithms and interactive data analysis.

- Data processing model: MapReduce processes data in a two-step pipeline: map and reduce. Map tasks filter and sort the input, while reduce tasks aggregate and summarize the results. Spark, on the other hand, introduces Resilient Distributed Datasets (RDDs), distributed collections of objects that can be processed in parallel. RDDs support a rich set of transformations and actions that can be chained into complex processing flows, which makes Spark more flexible and versatile.

- Supported workloads: MapReduce is suitable for batch processing large data sets, mainly when the data access pattern is sequential and read-heavy. In addition to batch processing, Spark supports real-time stream processing, interactive queries, and machine learning workloads. Its ability to handle a variety of workloads makes it a more versatile choice.

- Built-in libraries: Spark has a rich set of built-in libraries, such as Spark SQL for SQL-based data processing, Spark Streaming for real-time data streams, MLlib for machine learning, and GraphX for graph processing. These libraries simplify development and provide high-level abstractions for different data processing tasks. In comparison, Hadoop MapReduce requires additional frameworks, like Apache Hive or Apache Pig, to provide similar functionality.

8. What is the difference between left, right, and inner joins?

Expected answer: LEFT JOIN, RIGHT JOIN, and INNER JOIN are SQL join types that combine rows from two or more tables based on a column they have in common.

- Inner join

An inner join returns only the rows that have a match in both tables; it is the most frequently used join. Suppose we have two tables, customers and orders. The customers table holds information about customers, such as name and address, and the orders table holds information about orders, such as which customer placed each order and on what date.

An inner join of these tables returns a customer row only if there is a matching row in the orders table. If John Doe placed an order, the inner join returns a row that combines John Doe's data from the customers table with the data for his order from the orders table.

- Left join

A left join returns all rows from the left table, even if no matches are in the right table. This is useful when you want to see all of the data from the left table, even if there is no corresponding data in the right table.

For example, take the same two tables as before: customers and orders. A left join of customers with orders returns every row from the customers table, even if there is no matching row in the orders table. If a customer named "Jane Doe" has never placed an order, the left join still returns the "Jane Doe" row, with NULL values in the order columns.

- Right join

A right join is similar to a left join, except that it returns all rows from the right table, even if there are no matches in the left table. Using the same customers and orders tables, a right join of customers with orders returns every row from the orders table, even if there is no matching row in the customers table. For example, if an order was placed by a customer who is not in the customers table, the right join still returns that order's row, with NULL values in the customer columns.
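The three joins can be compared side by side with Python's built-in `sqlite3` module and small, hypothetical `customers` and `orders` tables. Because SQLite only added `RIGHT JOIN` in version 3.39.0, and older builds may be bundled with Python, the sketch expresses the right join as a `LEFT JOIN` with the table order swapped, which returns the same rows.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, item TEXT);
    INSERT INTO customers VALUES (1, 'John Doe'), (2, 'Jane Doe');
    -- Order 11 references customer 3, who is missing from customers
    INSERT INTO orders VALUES (10, 1, 'Laptop'), (11, 3, 'Phone');
""")

# Inner join: only customers with a matching order
inner = conn.execute("""
    SELECT c.name, o.item FROM customers c
    INNER JOIN orders o ON o.customer_id = c.id ORDER BY o.order_id
""").fetchall()

# Left join: every customer, NULL (None) where there is no order
left = conn.execute("""
    SELECT c.name, o.item FROM customers c
    LEFT JOIN orders o ON o.customer_id = c.id ORDER BY c.id
""").fetchall()

# Right join of customers and orders = left join of orders and customers
right = conn.execute("""
    SELECT c.name, o.item FROM orders o
    LEFT JOIN customers c ON o.customer_id = c.id ORDER BY o.order_id
""").fetchall()

print(inner)  # [('John Doe', 'Laptop')]
print(left)   # [('John Doe', 'Laptop'), ('Jane Doe', None)]
print(right)  # [('John Doe', 'Laptop'), (None, 'Phone')]
```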

9. Can you define data normalization?

Expected answer: Data normalization (also called database normalization) is a database design process that removes redundancy and improves data integrity by organizing data into logical structures. It involves splitting a database into multiple tables and then defining the relationships between them.

Data normalization aims to minimize data duplication and ensure that each piece of information is stored in only one place. This helps prevent data inconsistencies, update anomalies, and logical errors that can arise when redundant data is present.

Normalization typically follows rules or normal forms, which define progressively stricter levels of organization for the data. The most commonly used normal forms are:

- First Normal Form (1NF): Ensures that each attribute (column) in a table contains only atomic (indivisible) values, eliminating repeating groups and arrays.

- Second Normal Form (2NF): Builds on 1NF by requiring that every non-key attribute depend on the whole primary key. It eliminates partial dependencies, where an attribute depends on only part of a composite primary key.

- Third Normal Form (3NF): Extends 2NF by eliminating transitive dependencies. It ensures that all non-key attributes depend only on the primary key and not on other non-key attributes.
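A small worked example of removing a transitive dependency (the 3NF rule above): in the hypothetical denormalized rows below, `customer_city` depends on `customer_id` rather than on the key `order_id`, so it is repeated on every order. The sketch splits the data into a `customers` table and an `orders` table that references it, so a customer's city is stored, and updated, in exactly one place. Plain dicts stand in for database tables here.

```python
# Denormalized (hypothetical): customer details repeated on every order row
rows = [
    {"order_id": 1, "customer_id": 7, "customer_city": "Oslo", "amount": 50},
    {"order_id": 2, "customer_id": 7, "customer_city": "Oslo", "amount": 20},
    {"order_id": 3, "customer_id": 8, "customer_city": "Rome", "amount": 70},
]


def normalize(rows):
    """Split rows into a customers table and an orders table (3NF)."""
    customers = {}  # customer_id -> attributes that depend only on customer_id
    orders = {}     # order_id -> attributes that depend only on order_id
    for r in rows:
        customers[r["customer_id"]] = {"city": r["customer_city"]}
        orders[r["order_id"]] = {"customer_id": r["customer_id"], "amount": r["amount"]}
    return customers, orders


customers, orders = normalize(rows)
customers[7]["city"] = "Bergen"  # one update, no risk of inconsistent copies
print(customers)
print(orders)
```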

Expected answer: Use a distributed processing framework like Apache Spark or Apache Flink to handle the data transformation and aggregation tasks in parallel.

Implement a message queue system (e.g., Apache Kafka) to buffer and decouple the data ingestion process from the data processing and storage components.

Utilize a cloud-based infrastructure that provides auto-scaling capabilities to handle varying data loads. Implement fault tolerance mechanisms such as data replication, checkpointing, and monitoring to ensure data integrity and availability.
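The buffering and decoupling role of a message queue can be sketched in-process with Python's `queue.Queue`, used here only as an analogy for a system such as Kafka (which additionally provides durable, partitioned, replicated storage). The producer enqueues hypothetical events without waiting for processing, the consumer drains them at its own pace, and the bounded `maxsize` makes the producer block when the consumer falls behind, a simple form of back-pressure.

```python
import queue
import threading

events = queue.Queue(maxsize=100)  # bounded buffer between ingestion and processing
SENTINEL = None                    # signals the end of the stream


def producer(n):
    """Ingestion side: push raw (hypothetical) events into the buffer."""
    for i in range(n):
        events.put({"event_id": i, "value": i * 10})  # blocks only if the buffer is full
    events.put(SENTINEL)


def consumer(results):
    """Processing side: read events independently of the producer."""
    while True:
        event = events.get()
        if event is SENTINEL:
            break
        results.append(event["value"])


results = []
threads = [threading.Thread(target=producer, args=(500,)),
           threading.Thread(target=consumer, args=(results,))]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(len(results), sum(results))
```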

11. As a data engineer, can you explain how you continuously monitor data quality?

Expected answer: Below are some of the ways to monitor data quality on an ongoing basis:

- Define data quality metrics. The first step is to define the specific metrics that will be used to measure data quality. These metrics should be relevant to the business needs and should be measurable. For example, you might define completeness, accuracy, consistency, and timeliness metrics.

- Implement data profiling. Data profiling collects information about data, such as its structure, content, and quality. This information can be used to identify potential data quality issues. Several data profiling tools are available, both commercial and open source.

- Develop data quality checks. Once you have identified potential data quality issues, you must develop data quality checks to identify and correct them. These checks can be automated or manual. For example, you might develop a check to ensure all customer records have a valid email address.

- Continuously monitor data quality. Data quality is not a one-time task: you need to monitor it continuously to ensure it stays high, for example by tracking your data quality metrics and running data profiling tools on a regular schedule.

- Report on data quality. It is essential to report on data quality to the business stakeholders. This will help them to understand the state of data quality and to take action to improve it.

- Respond to data quality issues. When data quality issues are identified, they need to be responded to promptly. This may involve correcting the data, removing the data, or notifying the business stakeholders.

Always include the business stakeholders in the process and use appropriate data quality tools. Automate as much as possible and make data quality a top priority. For the data quality checks themselves, try null value checks, duplicate value checks, data type checks, range checks, and format checks.
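The null, duplicate, type, and range checks mentioned above can be turned into batch-level metrics that are computed on every load and compared against thresholds. The sketch below is a hypothetical, standard-library-only example: the `quality_report` function, the column names, and the 5% null-rate threshold are assumptions, and in practice these results would feed a dashboard or an alerting system.

```python
def quality_report(rows, key, numeric_col, lo, hi, max_null_rate=0.05):
    """Compute simple data quality metrics for a batch of dict rows."""
    total = len(rows)
    # Null check on the numeric column
    nulls = sum(1 for r in rows if r.get(numeric_col) is None)
    # Duplicate check on the key column
    keys = [r.get(key) for r in rows]
    duplicates = len(keys) - len(set(keys))
    # Type and range checks on the non-null values
    values = [r[numeric_col] for r in rows if r.get(numeric_col) is not None]
    wrong_type = sum(1 for v in values if not isinstance(v, (int, float)))
    out_of_range = sum(1 for v in values
                       if isinstance(v, (int, float)) and not lo <= v <= hi)
    null_rate = nulls / total if total else 0.0
    passed = (null_rate <= max_null_rate and duplicates == 0
              and wrong_type == 0 and out_of_range == 0)
    return {
        "rows": total,
        "null_rate": null_rate,
        "duplicate_keys": duplicates,
        "wrong_type": wrong_type,
        "out_of_range": out_of_range,
        "passed": passed,
    }


batch = [
    {"id": 1, "price": 9.99},
    {"id": 2, "price": None},
    {"id": 2, "price": "12"},
    {"id": 3, "price": -5},
]
print(quality_report(batch, key="id", numeric_col="price", lo=0, hi=1000))
```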

Expected answer: When troubleshooting Spark performance issues, there are several steps you can take to identify and resolve the underlying problems. Common approaches include:

- Understand the performance goals: Clearly define your Spark application's performance goals and expectations. This includes factors such as execution time, resource utilization, and scalability. A clear understanding of the desired performance outcomes will help guide your troubleshooting efforts.

- Monitor resource utilization: Start by monitoring the resource utilization of your Spark application. Use Spark's built-in monitoring tools, such as the Spark UI or Spark History Server, to analyze metrics like CPU and memory usage, disk I/O, and network activity. Identify any resource bottlenecks that may be impacting performance.

- Review execution plan: Analyze the Spark execution plan for your application. The execution plan outlines the sequence of transformations and actions performed on the data. Check for any inefficient or redundant operations that could be optimized. Ensure that the execution plan is aligned with your intended data processing logic.

- Data skew and data partitioning: Data skew, where certain partitions hold significantly more data than others, can lead to performance issues. Check whether skew exists by analyzing the distribution of data across partitions. Consider repartitioning, bucketing, or salting to distribute data evenly and alleviate skew-related problems.

- Tune data serialization: Data serialization plays a crucial role in Spark performance. Evaluate the serializer (for example, Spark's default Java serialization versus Kryo) as well as the data formats you read and write (e.g., Avro, Parquet), and consider switching to more efficient options. Additionally, tune the serialization buffer size and make sure only necessary data is serialized to minimize overhead.

- Memory management: Proper memory management is critical for Spark performance. Review the memory configuration settings, such as heap size, off-heap memory, and memory fraction allocated to execution and storage. Adjust these settings based on the memory requirements of your workload. Monitor garbage collection activity to detect potential memory-related bottlenecks.

- Shuffle optimization: Shuffle operations, such as groupBy, reduceByKey, or join, can be a performance bottleneck in Spark. Optimize shuffles by reducing the amount of data shuffled, tuning the shuffle buffer size, and considering techniques like broadcast joins or map-side aggregation to minimize shuffle overhead.

- Caching and persistence: Leverage Spark's caching and persistence mechanisms strategically to avoid unnecessary re-computation and improve performance. Identify portions of your data or intermediate results that can be cached in memory or persisted on disk for reuse across stages or iterations.

- Partitioning and parallelism: Ensure that the data is appropriately partitioned and that parallelism is used effectively. Evaluate the number of partitions and adjust it based on the size of your data and the available resources. Consider repartitioning or coalescing data to balance parallelism against resource consumption.

- Logging and debugging: Enable detailed logging and use Spark's logging capabilities to identify potential issues. Review the logs for error messages, warnings, or exceptions. Additionally, run the application in local mode with an IDE debugger, or interactively in spark-shell or pyspark, to step through your code and pinpoint performance bottlenecks.

- Hardware and cluster configuration: Assess the hardware infrastructure and cluster configuration. Ensure that the cluster is appropriately sized and provisioned with sufficient resources. Verify network connectivity, disk I/O performance, and overall cluster health. Make necessary adjustments to the hardware and configuration settings as required.

- Experiment and benchmark: Conduct experiments and benchmarks to compare the performance of different approaches, configurations, or optimizations. This iterative process can help identify the most effective solutions for your specific workload.
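To make the shuffle-optimization point concrete, the following pure-Python sketch (not Spark code) simulates why map-side aggregation shrinks a shuffle. Spark's `reduceByKey` combines values within each partition before shuffling, whereas `groupByKey` sends every record across the network. The sample partitions and function names are hypothetical and only model how many records would cross the shuffle.

```python
from collections import Counter, defaultdict

# Hypothetical input: each inner list is one partition of (key, value) records.
PARTITIONS = [
    [("a", 1), ("b", 1), ("a", 1), ("a", 1)],
    [("b", 1), ("b", 1), ("a", 1), ("c", 1)],
]


def shuffle_without_combine(partitions):
    """Send every record through the shuffle (groupByKey-style), then sum per key."""
    shuffled = [record for part in partitions for record in part]
    totals = defaultdict(int)
    for key, value in shuffled:
        totals[key] += value
    return dict(totals), len(shuffled)


def shuffle_with_combine(partitions):
    """Pre-aggregate inside each partition (reduceByKey-style) before the shuffle."""
    shuffled = []
    for part in partitions:
        local = Counter()
        for key, value in part:
            local[key] += value
        shuffled.extend(local.items())  # at most one record per key per partition
    totals = defaultdict(int)
    for key, value in shuffled:
        totals[key] += value
    return dict(totals), len(shuffled)


if __name__ == "__main__":
    print(shuffle_without_combine(PARTITIONS))  # ({'a': 4, 'b': 3, 'c': 1}, 8)
    print(shuffle_with_combine(PARTITIONS))     # ({'a': 4, 'b': 3, 'c': 1}, 5)
```

Both approaches produce the same totals, but the combined version moves 5 records through the shuffle instead of 8. With real data that repeats keys heavily within each partition, the difference is typically much larger.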

13. Can you list and explain Hadoop's components?

Expected answer: Hadoop is an open-source framework designed to process and store large amounts of data across distributed clusters of computers. It was created to address the challenges of handling massive datasets that traditional systems cannot effectively process. The framework provides a scalable and reliable distributed data processing and analysis platform.

Hadoop consists of several core components that work together to enable its functionality. Let's explore each component in more detail:

- Hadoop Distributed File System (HDFS): HDFS is a distributed file system that stores and manages massive datasets across a cluster of commodity hardware. It has a master-slave architecture. The master node, called the NameNode, maintains the files' metadata and block locations. The DataNodes are slave nodes that store the actual data blocks. HDFS breaks large files into blocks (typically 128 MB or 256 MB) and replicates each block across different DataNodes for fault tolerance. The default replication factor is three, meaning each block is stored on three different DataNodes.

- Yet Another Resource Negotiator (YARN): YARN is the resource management framework of Hadoop. It allows multiple processing engines to run on the same cluster and efficiently utilize cluster resources. YARN comprises two main components: the ResourceManager (RM) and the NodeManager (NM). The RM manages and allocates resources to applications based on their requirements. It keeps track of available resources and schedules tasks across the cluster. The NM runs on each node and manages resources (CPU, memory) on that node. It launches and monitors application containers and reports back to the RM. YARN provides a pluggable architecture that allows different application frameworks like MapReduce, Spark, and Hive to operate on top of it.

- MapReduce: MapReduce is a programming model and processing engine in Hadoop for parallel data processing. It breaks down a computational task into two stages: the Map stage and the Reduce stage. The Map stage takes input data and processes it in parallel across multiple nodes. It applies a user-defined map function to each input record and generates intermediate key-value pairs. The intermediate pairs are then partitioned and grouped based on their keys. In the Reduce stage, the intermediate results with the same key are processed by a user-defined reduce function to produce the final output. MapReduce automatically handles parallelization, data distribution, fault tolerance, and load balancing across the cluster.

- Hadoop Common: Hadoop Common contains the essential libraries and utilities that other Hadoop components need. This includes the HDFS client library, which allows applications to interact with HDFS, and the YARN client library, which submits and manages applications on the YARN framework. Hadoop Common also provides the configuration files, scripts, and command-line tools for managing and administering the Hadoop cluster. It ensures compatibility across different Hadoop distributions and provides a consistent programming model for developers.

The Hadoop ecosystem consists of a wide range of tools and frameworks that complement and extend the capabilities of the Hadoop platform. Here's a brief overview of some popular tools in the Hadoop ecosystem:

- Data Access:

Apache Hive: Apache Hive is a data warehouse infrastructure built on top of Hadoop. It provides a SQL-like query language called HiveQL, which lets users query and analyze data stored in Hadoop using familiar SQL syntax. Hive translates HiveQL queries into MapReduce or Tez jobs for execution.

Apache Pig: Pig is a high-level platform for data analysis and processing on Hadoop. Its simple and expressive scripting language, Pig Latin, abstracts away the complexities of writing MapReduce jobs. Pig Latin scripts are compiled into a series of MapReduce jobs or executed using Apache Tez.

- Data Storage:

Apache HBase: HBase is a distributed, scalable, and column-oriented NoSQL database built on top of Hadoop. It provides real-time read/write access to large datasets. HBase is suitable for applications that require random access to large amounts of structured data and high write throughput.

- Data Integration:

Apache Sqoop: Sqoop is a tool that efficiently transfers bulk data between Hadoop and structured data stores, such as relational databases. It allows users to import data from databases into Hadoop (HDFS or Hive) and export data from Hadoop to databases.

Apache Kafka: Kafka is a distributed messaging system that enables high-throughput, fault-tolerant, real-time data streaming. It is used predominantly to build real-time data pipelines and streaming applications. Kafka follows a publish-subscribe model in which producers publish messages to topics and consumers subscribe to those topics to consume the data.

Apache Flume: Flume is a distributed, reliable, and scalable tool for collecting, aggregating, and moving large volumes of log data or event streams from various sources into Hadoop. It provides a flexible architecture with pluggable sources and sinks to handle diverse data sources and destinations.

Apache Chukwa: Chukwa is a data collection and monitoring system in the Hadoop ecosystem. It is designed to collect, aggregate, and analyze large volumes of log data that distributed systems generate. Chukwa provides a scalable and reliable real-time framework for monitoring and analyzing system logs.

- Data Serialization:

Java Serialization: Java Serialization is Java's built-in serialization mechanism. It converts objects into a byte stream and back. Hadoop can use it through its pluggable serializer framework, but it is not the default: because Java Serialization is relatively verbose and slow, MapReduce serializes the keys and values exchanged between mappers and reducers with Hadoop's own compact Writable format.

Apache Avro: Avro is a data serialization framework that provides a compact, efficient, and schema-based serialization format. Avro allows you to define the data schema using JSON, which makes it easy to read and interpret. It supports dynamic typing and schema evolution, making it suitable for evolving data structures and compatibility across different versions of data.

Apache Parquet: Parquet is a columnar storage format for efficient and high-performance analytics. It stores data in a column-wise manner, which enables faster query processing and better compression ratios. Parquet uses efficient compression algorithms and encoding techniques to optimize data storage and retrieval. It is commonly used with Apache Hive and Apache Impala for querying and analyzing data in the Hadoop ecosystem.

Apache ORC: ORC (Optimized Row Columnar) is another columnar storage format developed by the Apache community. It is optimized for large-scale analytics and provides improved performance and compression compared to traditional row-based formats. ORC supports predicate pushdown, column pruning, and other advanced optimizations, making it well-suited for interactive query processing.

Apache Thrift: Apache Thrift is a serialization and remote procedure call (RPC) framework for efficient cross-language communication. It provides a language-agnostic definition language called Thrift IDL (Interface Definition Language) to define data types and services.

JSON and XML: Although not specific to the Hadoop ecosystem, JSON (JavaScript Object Notation) and XML (eXtensible Markup Language) are widely used data interchange formats. They often represent structured data that needs to be transmitted or stored in a human-readable format. Hadoop tools and frameworks like Apache Pig and Apache Hive support processing JSON and XML data.

- Data Intelligence:

Apache Spark: Spark is a fast and general-purpose cluster computing framework that provides in-memory data processing capabilities. It supports various programming languages (such as Scala, Java, and Python) and offers high-level APIs for batch processing, real-time streaming, machine learning, and graph processing. Spark can read data from Hadoop Distributed File System (HDFS) and other sources.

Apache Drill: Apache Drill is a distributed SQL query engine designed for the interactive analysis of large-scale datasets. It provides a schema-free, SQL-like query language that enables users to query and analyze data stored in various formats and sources, including files, NoSQL databases, and relational databases. Drill supports on-the-fly schema discovery, which allows it to handle semi-structured and nested data. It leverages a distributed architecture to perform parallel query execution, making it suitable for exploratory and ad-hoc data analysis tasks.

Apache Mahout: Mahout is a distributed machine learning library that provides scalable, efficient implementations of common machine learning algorithms. It enables users to build and deploy machine learning models on large datasets. Mahout supports batch processing and real-time streaming, making it suitable for a wide range of use cases, from recommendation systems to clustering and classification tasks.

Apache Flink: Flink is a stream processing framework with low-latency and high-throughput data processing capabilities. Using a unified API, it supports event-driven, real-time data processing and batch processing. Flink can efficiently process large-scale data streams and perform real-time analytics.

- Data management, orchestration, and monitoring:

Apache Oozie: Oozie is a workflow scheduler system that allows users to define and manage data processing workflows in Hadoop. It provides a way to coordinate and schedule various tasks and jobs within a workflow, including MapReduce, Hive, Pig, and Spark jobs. Oozie supports both time-based and event-based triggering of workflows and provides a web-based interface for managing and monitoring workflows.

Apache Falcon: Falcon is a data management and governance tool that simplifies the management and lifecycle of data pipelines on Hadoop. It provides a way to define, schedule, and orchestrate data workflows, ensuring the reliability and timeliness of data processing. Falcon offers data replication, retention policies, data lineage, and metadata management capabilities.

Apache Airflow: Airflow is an open-source platform for orchestrating and scheduling workflows. It provides a way to define and manage complex data pipelines as directed acyclic graphs (DAGs). Airflow supports various tasks and integrates with different data processing frameworks, allowing users to create end-to-end workflows for data ingestion, processing, and analysis.

Apache NiFi: NiFi is a data integration and routing tool that provides a visual interface for designing and managing data flows. It offers robust data routing, transformation, and enrichment capabilities, making it easy to ingest, process, and distribute data across different systems and platforms. NiFi supports real-time data streaming and provides built-in monitoring and alerting features.

Apache Ambari: Ambari is a cluster management and monitoring tool designed specifically for Hadoop. It provides a web-based interface for provisioning, managing, and monitoring Hadoop clusters. Ambari simplifies the deployment and configuration of Hadoop components, provides health checks and alerts, and offers a centralized view of cluster performance and resource utilization.

Apache ZooKeeper: ZooKeeper is a distributed coordination service and an open-source project in the Apache Hadoop ecosystem. It provides a reliable, highly available infrastructure that distributed applications use to coordinate and synchronize their activities.
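The MapReduce stages described in the component list (map, group by key, reduce) can be illustrated with the classic word-count example. This is a single-process Python sketch of the programming model, not Hadoop's Java API; in a real cluster, the framework runs the map and reduce functions in parallel on many nodes and performs the grouping step (the shuffle) itself. The function names are illustrative.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Iterable, Iterator


def map_phase(line: str) -> Iterator[tuple[str, int]]:
    """User-defined map function: emit (word, 1) for every word in one input record."""
    for word in line.lower().split():
        yield word, 1


def shuffle_phase(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Group intermediate values by key; Hadoop does this between the two stages."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """User-defined reduce function: combine all values that share a key."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> dict[str, int]:
    intermediate = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle_phase(intermediate)
    return dict(reduce_phase(key, values) for key, values in sorted(grouped.items()))


if __name__ == "__main__":
    print(word_count(["the quick fox", "The lazy dog"]))
    # {'dog': 1, 'fox': 1, 'lazy': 1, 'quick': 1, 'the': 2}
```

Only `map_phase` and `reduce_phase` correspond to code a developer writes in MapReduce; the grouping, distribution, and fault handling are what the framework provides.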

14. Can you define and elaborate on the Block & Block Scanner of HDFS?

Expected answer: In HDFS, a block is the basic unit of file storage: a fixed-size chunk of data stored and managed by HDFS. When a file is stored in HDFS, it is divided into blocks (only the last block may be smaller than the configured size), and each block is replicated across multiple DataNodes in the cluster for fault tolerance.

The block size in HDFS is large, commonly set to 128 megabytes (MB) or 256 MB, although it can be configured to suit system requirements. Large blocks reduce the amount of metadata the NameNode has to track and let HDFS spend most of its time streaming data rather than seeking on disk, which improves data transfer efficiency.
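A quick way to reason about block size and replication is to compute how a single file is laid out. The sketch below assumes a 128 MB block size and the default replication factor of three; the function name and output keys are illustrative, not part of any Hadoop API.

```python
MB = 1024 * 1024


def hdfs_block_layout(file_size: int, block_size: int = 128 * MB, replication: int = 3) -> dict:
    """Describe how a file of `file_size` bytes is split into HDFS-style blocks."""
    full_blocks, remainder = divmod(file_size, block_size)
    sizes = [block_size] * full_blocks
    if remainder:
        # The last block holds only the leftover bytes; it is not padded to block_size.
        sizes.append(remainder)
    return {
        "blocks": len(sizes),
        "last_block_bytes": sizes[-1] if sizes else 0,
        "block_replicas": len(sizes) * replication,
        # Every byte is stored `replication` times on different DataNodes.
        "raw_storage_bytes": file_size * replication,
    }


if __name__ == "__main__":
    layout = hdfs_block_layout(300 * MB)
    print(layout["blocks"], layout["last_block_bytes"] // MB)  # 3 44
```

For a 300 MB file, this yields two full 128 MB blocks plus a 44 MB final block: nine block replicas and 900 MB of raw storage across the cluster.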

The Block Scanner is an HDFS component that periodically verifies the integrity of the data blocks stored on the DataNodes. It detects corruption by recomputing each block's checksums and comparing them with the checksums stored in the block's metadata. If a replica turns out to be corrupted, the DataNode reports it to the NameNode, which schedules a new copy from a healthy replica on another DataNode and then removes the corrupted one.

The Block Scanner operates in the background and runs independently on each data node in the HDFS cluster. It scans several blocks in each scanning cycle, and the scanning frequency can be configured. The Block Scanner helps to detect and repair data corruption proactively, ensuring data reliability and minimizing the risk of data loss.
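The verification step can be sketched in a few lines of Python. By default, HDFS stores one checksum per 512-byte chunk (`dfs.bytes-per-checksum`) using CRC32C; this sketch uses `zlib.crc32` (plain CRC-32) as a stand-in, and the function names are hypothetical. It models only the comparison, not the reporting to the NameNode.

```python
from __future__ import annotations

import zlib

# Assumption: mirrors HDFS's default of one checksum per 512-byte chunk.
BYTES_PER_CHECKSUM = 512


def compute_checksums(block: bytes, chunk: int = BYTES_PER_CHECKSUM) -> list[int]:
    """Checksums recorded alongside the block when it is first written."""
    # zlib.crc32 (CRC-32) stands in for the CRC32C checksums HDFS uses by default.
    return [zlib.crc32(block[i:i + chunk]) for i in range(0, len(block), chunk)]


def scan_block(block: bytes, stored: list[int], chunk: int = BYTES_PER_CHECKSUM) -> list[int]:
    """Recompute checksums and return the indices of chunks that no longer match."""
    current = compute_checksums(block, chunk)
    if len(current) != len(stored):
        # A truncated or extended replica cannot be trusted at all.
        return list(range(max(len(current), len(stored))))
    return [i for i, (now, then) in enumerate(zip(current, stored)) if now != then]


if __name__ == "__main__":
    data = bytes(range(256)) * 8          # 2048 bytes, i.e. four 512-byte chunks
    stored = compute_checksums(data)
    damaged = bytearray(data)
    damaged[700] ^= 0xFF                  # flip the bits of one byte in chunk 1
    print(scan_block(data, stored))            # []
    print(scan_block(bytes(damaged), stored))  # [1]
```

Because byte 700 falls in the second 512-byte chunk, only index 1 is flagged. In a real cluster, such a finding triggers the report-and-re-replicate flow rather than being returned to a caller.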

15. Can you elaborate on Secondary NameNode and define its functions?

Expected answer: In Hadoop, the Secondary NameNode is a helper component that works alongside the NameNode. Despite its name, the Secondary NameNode does not act as a standby or backup for the NameNode. Instead, it assists the NameNode by checkpointing and maintaining the file system metadata.

The Secondary NameNode performs the following functions:

- Checkpointing: The Secondary NameNode creates periodic checkpoints of the file system metadata managed by the NameNode. The NameNode keeps the entire file system namespace and block information in memory and persists changes as an FsImage file plus an edit log, which can become a potential point of failure. To mitigate this, the Secondary NameNode regularly retrieves copies of the NameNode's FsImage and edit log files and merges them to create a new checkpoint. This checkpoint is saved on the local disk of the Secondary NameNode and sent back to the NameNode.

- Merging edit logs: Besides creating checkpoints, the Secondary NameNode is responsible for merging the edit log files generated by the NameNode. The edit log records all modifications to the file system, such as file creations, deletions, and modifications. By periodically merging these edit logs, the Secondary NameNode helps minimize their size and improve the efficiency of the NameNode during the startup process.

- Improved startup time: When the NameNode restarts, it must read and apply all the modifications recorded in the edit logs to restore the file system state. The Secondary NameNode's checkpointing and edit log merging functions help reduce the startup time by providing a consolidated and smaller set of edits to be processed during the NameNode restart.

- Data compaction: In older versions of Hadoop, the Secondary NameNode performed a compaction process in which it merged the FsImage (file system image) and edit logs into a single file. However, this function is no longer relevant in recent versions of Hadoop because the process has since been optimized.

Although the Secondary NameNode performs crucial tasks related to checkpointing and log merging, it is not a backup or standby for the NameNode in case of failure. Without additional measures, the NameNode remains the single point of failure in HDFS. In modern Hadoop deployments, HDFS High Availability (HA), which runs an active NameNode alongside one or more standby NameNodes, provides a more robust, fault-tolerant solution.
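The checkpointing idea (replay the edit log on top of the last FsImage, then start a fresh log) can be modeled with a toy in-memory namespace. This is a simplified Python sketch: real FsImage and edit log files are binary formats with many more operation types, and the operation names, paths, and functions below are hypothetical.

```python
from __future__ import annotations

import copy


def apply_edit(namespace: dict[str, dict], edit: tuple) -> None:
    """Replay one logged operation against the namespace."""
    op, path, *args = edit
    if op == "create":
        namespace[path] = {"replication": args[0]}
    elif op == "set_replication":
        namespace[path]["replication"] = args[0]
    elif op == "delete":
        namespace.pop(path, None)
    else:
        raise ValueError(f"unknown edit operation: {op}")


def checkpoint(fsimage: dict[str, dict], edit_log: list[tuple]) -> tuple[dict[str, dict], list[tuple]]:
    """Merge an FsImage with its edit log into a new FsImage (the checkpoint)."""
    new_image = copy.deepcopy(fsimage)  # work on a copy; the input image is not modified
    for edit in edit_log:
        apply_edit(new_image, edit)
    # Every edit is now reflected in the image, so the log can start over empty.
    return new_image, []


if __name__ == "__main__":
    fsimage = {"/data/a.csv": {"replication": 3}}
    edits = [
        ("create", "/data/b.csv", 3),
        ("set_replication", "/data/a.csv", 2),
        ("delete", "/data/b.csv"),
    ]
    print(checkpoint(fsimage, edits))  # ({'/data/a.csv': {'replication': 2}}, [])
```

After a checkpoint, a restarting NameNode only needs to load the new image and replay the (now short) edit log, which is why checkpointing shortens startup time.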

Possible challenges during the hiring of a Data Engineer

There are always challenges when hiring a new person, depending on the vocation and job requirements.

One major challenge is that the scope of the Data Engineer role has become large and confusing. It should not be mixed up with the following related roles:

- Database Administrator (DBA), who is more focused on creating and optimizing OLTP databases.

- Data Analysts, who are typically more focused on driving business value by creating dashboards and building ad-hoc reports.

- Analytics Engineer, whose role is similar to a Data Analyst’s but with more of a software engineer’s skill set (version control, CI/CD, use of Python/Scala/Java), and who typically focuses on data warehousing SQL pipelines and optimization.

- Machine Learning Engineer, who is skilled in deploying ML models built by Data Scientists into production. This role requires a deeper understanding of statistics, algorithms, and math. Some Data Engineers possess this knowledge, but in a medium to large-sized data team it should be a role of its own.

It should also be emphasized that hiring managers and employers quite often offer data engineers a salary below market value. This challenge lies on the hiring side: such managers focus on short-term costs rather than the long-term benefit of having a skilled data engineer in the company.

What distinguishes a great Data Engineer from a good one?

Selecting the best candidate for the Data Engineer role can be tricky, especially when two or more candidates have similar experience and expertise. Usually, however, one stands out through in-depth knowledge, mastery of technical skills, and a proactive, dynamic way of thinking.

The great Data Engineer:

- Creates solutions that are easily maintained.

For example, if manual data mapping is required to cleanse the data, does the developer hard-code the values or create a config file that can be easily updated?

- Understands business needs and doesn’t over-engineer solutions.

It is easy to fall into the trap of building more complex solutions than needed, for example a near-real-time streaming pipeline when the data only needs to be refreshed daily.
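To illustrate the maintainability question in the first point, here is a minimal Python sketch of the config-driven approach: the mapping lives in JSON rather than in code, so updating it does not require changing the pipeline. The file name, mapping values, and function names are hypothetical.

```python
from __future__ import annotations

import json

# Hypothetical contents of an editable mapping file (e.g. country_mapping.json).
MAPPING_JSON = '{"UK": "United Kingdom", "U.S.": "United States", "NL": "Netherlands"}'


def load_mapping(raw: str) -> dict[str, str]:
    """Parse the mapping; a real pipeline would read the JSON file from disk."""
    return json.loads(raw)


def cleanse(values: list[str], mapping: dict[str, str]) -> list[str]:
    """Normalize raw values; unmapped values pass through unchanged for later review."""
    return [mapping.get(v.strip(), v.strip()) for v in values]


if __name__ == "__main__":
    print(cleanse([" UK", "NL", "Germany"], load_mapping(MAPPING_JSON)))
    # ['United Kingdom', 'Netherlands', 'Germany']
```

Adding a new mapping then means editing the JSON file, not redeploying code, which is the kind of design choice the question is meant to surface in an interview.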

Let’s not forget that being a team player is especially important because a data engineer will need to regularly communicate with others in the team who have different roles (Data Scientist, Data Analyst, ML Engineer etc.) and other company teams.

The value of Data Engineering

Any business can benefit from data engineering because it enables companies to become more data-driven. It might sound vague, but data engineering is the foundation you need to make data accurate and easy to consume, and to enable advanced analytics and machine learning use cases. As mentioned above, roughly 80% of the effort in a typical data project is spent on data engineering.

In summary, Mehmet explains:

“Data engineers are responsible for designing the overall flow of data through the company and creating and automating data pipelines to implement this flow.”

With such an individual or team, a company can trust and rely on its data, knowing that its data engineers collect, store, and process the data reliably, which is the first step to becoming a data-driven company.
